When Legacy Code Meets Modern Load: A Deadlock Story

Recently, we encountered a persistent deadlock issue during a high-concurrency load test involving 100 simultaneous database operations. Although the database had sufficient capacity, a large portion of the operations were failing due to timeouts.

A closer inspection revealed the real culprit: lock contention inside SQL Server, caused by trigger management inside stored procedures. Specifically, our implementation used a pattern that disabled and re-enabled triggers dynamically, a seemingly harmless practice that turned out to be problematic under load.

What Went Wrong

The stored procedure in question was originally designed to operate across the entire table to correct data inconsistencies. It’s old code (part of a legacy monolith) and wasn’t built with concurrency in mind. When we adapted it for use in a modern, parallel processing context, it quickly became clear that its assumptions didn’t hold up.

The original pattern looked like this:

DISABLE TRIGGER [trigger_name] ON [table_name];

-- Update logic here

ENABLE TRIGGER [trigger_name] ON [table_name];

This approach forces SQL Server to acquire a Schema Modification (Sch-M) lock. This lock type is extremely restrictive, requiring exclusive access to the table and blocking all other operations, including simple SELECT statements that only request a Schema Stability (Sch-S) lock.

Under 100 concurrent executions, this became a breeding ground for deadlocks.

Confirming the Diagnosis

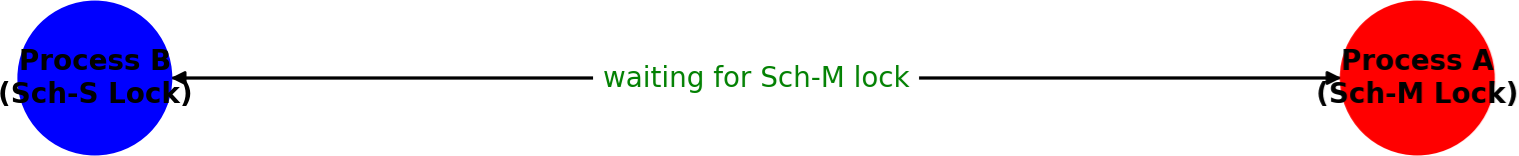

Here’s a simplified visual representation of the issue:

In this diagram, you can see the circular dependency:

- Process A is trying to acquire a Schema Modification (Sch-M) lock to disable a trigger.

- Process B is holding a Schema Stability (Sch-S) lock from a concurrent read.

Each process is waiting on the other, resulting in a deadlock. SQL Server resolves this by terminating one of the transactions (the deadlock victim) which leads to the timeout errors we observed under load.

- The deadlock graph provided the evidence:

- One process was attempting to disable a trigger, needing a Sch-M lock.

- Another process was concurrently reading from the same table with a Sch-S lock.

- Neither could proceed without the other relinquishing its lock.

Deadlock. A victim was chosen. The cycle repeated.

The Fix: Context-Aware Triggers

Rather than disabling triggers outright, a much safer and scalable alternative is to allow the trigger itself to determine when to execute. This can be achieved by checking for a session-specific context value.

- Set a context flag in the procedure

EXEC sp_set_session_context 'SKIP_TRIGGER', 1;

- In the trigger, check for that flag

IF SESSION_CONTEXT(N'SKIP_TRIGGER') IS NOT NULL

RETURN;

This approach allows the trigger to exit gracefully during known operations, avoiding the need for Sch-M locks entirely. It’s safer, more predictable under load, and dramatically reduces the chance of deadlocks.

For implementation reference, check out Microsoft’s documentation on SESSION_CONTEXT.

What We Changed

We updated the affected triggers and stored procedures to follow this pattern. After deploying the changes, our concurrent load test completed successfully and without triggering a single deadlock. Performance also improved thanks to reduced lock contention.

Why SESSION_CONTEXT Is Preferred Over CONTEXT_INFO

Before SESSION_CONTEXT was introduced in SQL Server 2016, developers used CONTEXT_INFO to pass session-scoped flags. It’s still used in legacy codebases, but it has several caveats, especially when used in systems with connection pooling.

While both SESSION_CONTEXT and CONTEXT_INFO can be used to pass session-scoped values, SESSION_CONTEXT is generally preferred:

- It supports key/value pairs instead of raw binary blobs

- Values are human-readable, making it easier to debug

- It supports up to 256 keys, each with a 128-character limit

- It’s more compatible with modern SQL Server constructs and safer for concurrent operations

This makes SESSION_CONTEXT a more maintainable and scalable choice for flag-based logic.

However, if you’re working with older systems still using CONTEXT_INFO, be aware that the context value can persist across pooled sessions. If not explicitly cleared or reset at the end of a procedure, it may affect subsequent operations that reuse the same connection.

To mitigate this, it’s a good idea to reset the context value at the end of the procedure, either by clearing it or restoring a default state. For example:

-- Clear CONTEXT_INFO at the end

SET CONTEXT_INFO 0x;

When using SESSION_CONTEXT, we can do something like this:

EXEC sp_set_session_context 'SKIP_TRIGGER', 1;

-- ... do work ...

EXEC sp_set_session_context 'SKIP_TRIGGER', 0;

This explicit setting and clearing pattern helps avoid unexpected behaviour and makes your logic easier to reason about, especially in systems where procedures are reused across varying contexts.

Safely Reusing Legacy Procedures

Repurposing older stored procedures in a modern, concurrent workload requires a bit of care. Here are some key strategies:

- Review Locking Behaviour: Understand the locks each operation acquires.

- Avoid Sch-M Where Possible: Especially in bulk or concurrent operations.

- Use Session-Aware Logic: Enable your code to conditionally skip logic.

- Simulate Load Early: Reproduce concurrency locally before it hits production

Deadlock Anatomy: A Quick Primer

A SQL Server deadlock occurs when two or more sessions block each other by holding a lock the other needs. Neither can proceed without the other releasing a lock, creating a circular dependency. SQL Server automatically chooses one process as the ‘deadlock victim’ and rolls it back to allow the others to continue.

This can happen with many combinations of locks, but is especially common when high-level locks like Sch-M are introduced into a mix of read/write operations.

Simulating a Simple Deadlock

If you’re curious or want to test monitoring and alerting setups, you can replicate a deadlock like this in a safe test environment:

-- Session 1

BEGIN TRAN;

UPDATE SomeTable SET ColumnA = 'X' WHERE Id = 1;

-- Wait here before Session 2 executes

In a new session:

-- Session 2

BEGIN TRAN;

UPDATE SomeTable SET ColumnB = 'Y' WHERE Id = 2;

In the second session:

UPDATE SomeTable SET ColumnA = 'X' WHERE Id = 1;

Back to the first session:

UPDATE SomeTable SET ColumnB = 'Y' WHERE Id = 2;

This will create a deadlock scenario between Session 1 and Session 2. Useful for demo purposes or validating monitoring tools.

Bonus: How to Monitor for Deadlocks

If you suspect you’re hitting deadlocks, this query can help you confirm it:

SELECT TOP 10 *

FROM sys.dm_os_ring_buffers

WHERE ring_buffer_type = 'RING_BUFFER_DEADLOCK'

ORDER BY timestamp DESC;

This will show you recent deadlocks, which you can then correlate with application logs or custom alerts.

Takeaway

If you’re using DISABLE TRIGGER inside SQL Server procedures and experiencing concurrency issues, it’s worth revisiting your strategy. A session-aware trigger mechanism avoids heavy locking and improves system resilience.

Share on: